Tag: robot

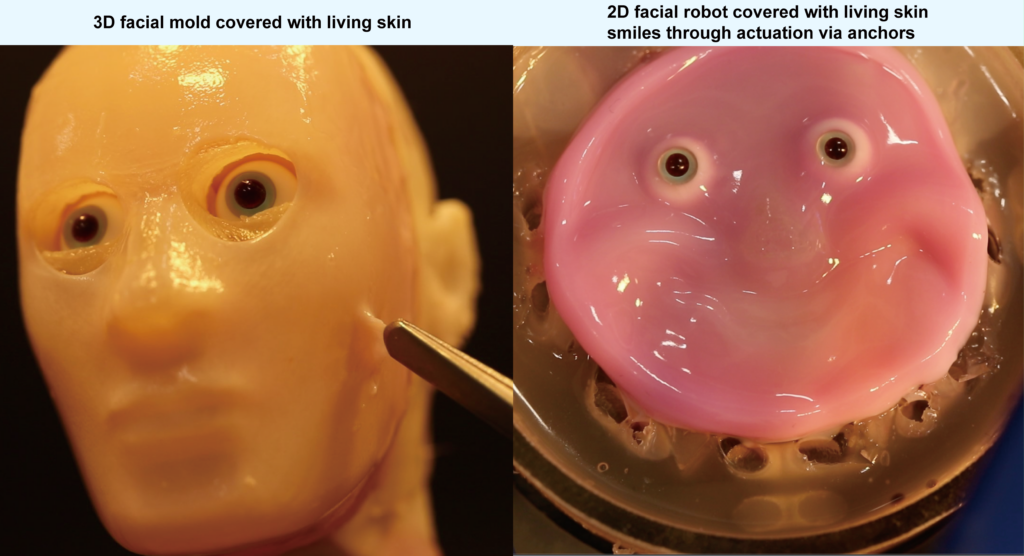

Human-skinned robot

Researchers are exploring the use of living human skin to cover a mechanical apparatus. Apparently, human skin has interesting properties for robots too.

To demonstrate this concept, they’ve recreated a human face.

“In this study, we managed to replicate human appearance to some extent by creating a face with the same surface material and structure as humans,” said Takeuchi. “Additionally, through this research, we identified new challenges, such as the necessity for surface wrinkles and a thicker epidermis to achieve a more humanlike appearance. […] Of course, movement is also a crucial factor, not just the material, so another important challenge is creating humanlike expressions by integrating sophisticated actuators, or muscles, inside the robot.”

https://www.u-tokyo.ac.jp/focus/en/press/z0508_00360.html

Dude, you’re going to have to do better than putting 2 googly eyes on a petri dish to call this a human face. But at least it seems you’re having fun.

Need a push?

Robots won’t steal our jobs, they’ll make us work for them.

(source)

Tentacle robot

This is gross and amazing at the same time.

Acrobot circus

I can’t tell if we’re on the verge of some new kind of circus or if this video unintentionally demonstrates that the power to amaze us will always stand on the flesh side of things.

Quite a cool self-built prototype, though. Definitely a lot of clown potential.

Crashing at scale

Waymo is voluntarily recalling the software that powers its robotaxi fleet after two vehicles crashed into the same towed pickup truck.

Waymo recalls and updates robotaxi software after two cars crashed into the same towed truck

Let’s first look at the curious choice of the word “recall” to describe a software rollback, given how the term is generally used in the car industry. It makes it sound like Waymo had to pull its whole fleet off the street because a software update went wrong, the way Tesla had to recall all the cars it sold in the US because of a non-compliant emergency light design. Waymo didn’t do that. They just reverted the software update and uploaded a patched version. Calling it a recall is a bit of a misnomer, apparently meant to make them look compliant with the safety practices that exist for regular consumer cars. But that framework is clearly not adapted to this new, software-defined vehicle ownership model.

The second interesting bit here, which seems to have been overlooked by the journalist reporting this incident, is that a “minor” (according to Waymo) software failure caused two consecutive, identical accidents between Waymo cars and the same pickup truck. Read that again. One unfortunate pickup truck was hit by two different Waymo cars within a couple of minutes because it looked weird. Imagine if that pickup truck had crossed the path of more vehicles running that faulty software update. How many crashes would that have caused?

The robotaxis’ software had not accounted for a pickup truck in that particular configuration, so none of these robotaxis were able to behave correctly around it, resulting in multiple crashes. Which raises the question: should a fleet of hundreds or even thousands of robotaxis run on the same software version (with potentially the same bugs)? If you happen to drive a vehicle or wear a garment that makes a robotaxi behave dangerously, suddenly every robotaxi out there is out to get you.

Driving isn’t an autonomous activity

Driverless cars are often called autonomous vehicles – but driving isn’t an autonomous activity. It’s a co-operative social activity, in which part of the job of whoever’s behind the wheel is to communicate with others on the road. Whether on foot, on my bike or in a car, I engage in a lot of hand gestures – mostly meaning ‘wait!’ or ‘go ahead!’ – when I’m out and about, and look for others’ signals. San Francisco Airport has signs telling people to make eye contact before they cross the street outside the terminals. There’s no one in a driverless car to make eye contact with, to see you wave or hear you shout or signal back. The cars do use their turn signals – but they don’t always turn when they signal.

“In the Shadow of Silicon Valley” by Rebecca Solnit

Cute unicycle robot with middle fingers

I love how this little thing balances itself.

They call them arms, but they look more like fingers to me.

Obviously, its creators care about it. It’s so cute how they try to push it off balance without hurting it.

…but safe

Not sure if it’s intentional, but putting “agile” and “safe” in the same title is sure to rank high in search-engine confusion (hello, SAFe, the Scaled Agile Framework), especially with a GitHub site promoting your paper. You’re going to get a ton of hits from webshits with 99 problems, but robotdog obstacle avoidance ain’t gonna be one.

Also, calling something “but safe” is, how do I put it clearly but nicely, shooting yourself in the bearing balls. You’re not going to make me believe for one second that this noisy, cocaine-high, articulated pet is harmless.

Looks like you know your classics, though: the “robotdog kicking bloopers” are always welcome. You did seem a little too careful not to hurt the animal, though. A little too safe?

Who’s programming who?

Illustration, p. 27, Petite Philosophie des algorithmes sournois.